Definition and Meaning of Performance Evaluation

The "Performance evaluation of pattern classifiers for handwritten - cse msu" document describes a systematic study of how accurately various pattern classifiers recognize handwritten digits. These classifiers are algorithms that assign an input pattern to a category based on features learned from training data. The study compares several classifier families: statistical classifiers, neural classifiers, and learning vector quantization (LVQ) classifiers. Testing them on real-world handwriting data shows the strengths and weaknesses of each, which helps in selecting the most effective approach for a specific application.

Key Elements of the Evaluation Process

The evaluation measures each classifier against four criteria:

- Classification Accuracy: The ability of each classifier to correctly identify and categorize handwritten digits.

- Sensitivity to Training Sample Size: How variation in the amount of training data affects each classifier's performance.

- Ambiguity Rejection: The ability to detect inputs that could plausibly belong to more than one class and to reject them instead of risking a misclassification.

- Outlier Resistance: The ability to recognize and reject inputs that do not belong to any of the target classes, such as non-digit shapes or noise, rather than forcing them into a digit class.

Each of these elements is crucial for determining the overall efficacy and reliability of a handwriting recognition system.
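Ambiguity rejection and outlier resistance are commonly measured by letting the classifier reject low-confidence inputs and then reporting the error rate against the rejection rate. The sketch below assumes the classifier outputs probability-like per-class scores; the function names and the two thresholds (a minimum top score and a minimum gap between the top two scores) are illustrative assumptions, not the study's exact rejection rule.

```python
"""Sketch: ambiguity/outlier rejection by thresholding classifier confidence.

Assumes each classifier output is a list of per-class scores that behave
like probabilities (higher = more confident), with at least two classes.
The threshold defaults are illustrative, not taken from the study.
"""
from typing import List, Optional, Sequence, Tuple


def classify_with_rejection(
    scores: Sequence[float], min_top: float = 0.6, min_margin: float = 0.2
) -> Optional[int]:
    """Return the predicted class index, or None to reject the input.

    Rejects when the best score is too low (possible outlier) or when the
    top two scores are too close (ambiguous between two classes).
    """
    ranked = sorted(range(len(scores)), key=lambda c: scores[c], reverse=True)
    best, second = ranked[0], ranked[1]
    if scores[best] < min_top or scores[best] - scores[second] < min_margin:
        return None
    return best


def error_reject_rates(
    outputs: List[Tuple[Sequence[float], int]], **thresholds: float
) -> Tuple[float, float]:
    """Compute (error rate, rejection rate) over all samples."""
    errors = rejects = 0
    for scores, label in outputs:
        pred = classify_with_rejection(scores, **thresholds)
        if pred is None:
            rejects += 1
        elif pred != label:
            errors += 1
    n = len(outputs)
    return errors / n, rejects / n


# Toy scores for a 3-class problem: (per-class scores, true label)
outputs = [
    ([0.90, 0.05, 0.05], 0),  # confident and correct
    ([0.45, 0.40, 0.15], 1),  # ambiguous, rejected
    ([0.10, 0.80, 0.10], 2),  # confident but wrong
    ([0.34, 0.33, 0.33], 0),  # low confidence, rejected
]
print(error_reject_rates(outputs))  # (0.25, 0.5)
```

Sweeping the thresholds from lenient to strict traces an error-reject curve: each step trades a higher rejection rate for fewer errors among the accepted inputs.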

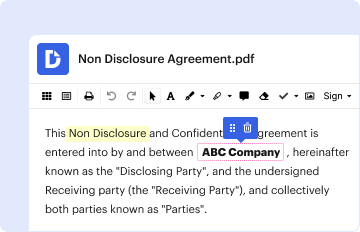

Steps to Complete the Evaluation

Conducting a comprehensive performance evaluation involves several methodical steps:

- Data Collection: Gather a large dataset of handwritten digits, such as NIST Special Database 19 (SD19), to serve as the basis for evaluation.

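- Classifier Implementation: Set up and configure the classifiers to be compared, such as the modified quadratic discriminant function (MQDF), the multilayer perceptron, the radial basis function network, the polynomial classifier, and learning vector quantization (LVQ).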

- Training and Testing: Train the classifiers on a subset of the dataset and test their performance on a separate subset.

- Performance Metrics Calculation: Calculate relevant metrics, such as accuracy, precision, recall, and F1-score, and, for rejection experiments, the error rate at each rejection rate.

- Analysis and Comparison: Analyze results to compare classifiers' performance across different metrics and conditions.
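The steps above can be sketched as a small evaluation loop: split the data into disjoint training and test subsets, train a classifier, and measure test accuracy while the training subset grows (which also probes sensitivity to training sample size). This is a hedged illustration only: synthetic 2-D Gaussian data stands in for digit images, and a simple nearest-centroid classifier stands in for MQDF, neural, or LVQ classifiers. All function names are hypothetical.

```python
"""Sketch of the evaluation loop: split, train, test, compare.

Assumptions (not from the study): synthetic 2-D feature vectors stand in
for digit images, and a nearest-centroid classifier stands in for the
classifiers actually compared.
"""
import math
import random
from typing import Dict, List, Tuple

Sample = Tuple[List[float], int]


def make_synthetic_data(n_per_class: int, seed: int = 0) -> List[Sample]:
    """Generate one Gaussian blob per class (placeholder for real digits)."""
    rng = random.Random(seed)
    centers = {0: (0.0, 0.0), 1: (3.0, 0.0), 2: (0.0, 3.0)}
    data = []
    for label, (cx, cy) in centers.items():
        for _ in range(n_per_class):
            data.append(([rng.gauss(cx, 1.0), rng.gauss(cy, 1.0)], label))
    rng.shuffle(data)
    return data


def train_nearest_centroid(train: List[Sample]) -> Dict[int, List[float]]:
    """'Training' here means computing the mean feature vector of each class."""
    sums: Dict[int, List[float]] = {}
    counts: Dict[int, int] = {}
    for x, y in train:
        acc = sums.setdefault(y, [0.0] * len(x))
        for i, v in enumerate(x):
            acc[i] += v
        counts[y] = counts.get(y, 0) + 1
    return {y: [v / counts[y] for v in s] for y, s in sums.items()}


def predict(centroids: Dict[int, List[float]], x: List[float]) -> int:
    """Assign x to the class whose centroid is closest (Euclidean distance)."""
    return min(centroids, key=lambda y: math.dist(x, centroids[y]))


def accuracy(centroids: Dict[int, List[float]], test: List[Sample]) -> float:
    """Fraction of test samples whose predicted class matches the label."""
    return sum(predict(centroids, x) == y for x, y in test) / len(test)


data = make_synthetic_data(n_per_class=200)
train, test = data[:450], data[450:]  # disjoint training and test subsets
# Sensitivity to training sample size: retrain on growing subsets.
for n_train in (15, 50, 150, 450):
    model = train_nearest_centroid(train[:n_train])
    print(n_train, round(accuracy(model, test), 3))
```

Keeping the test subset fixed while only the training subset grows isolates the effect of training sample size; to compare classifiers, each one would be trained and tested on exactly the same splits.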

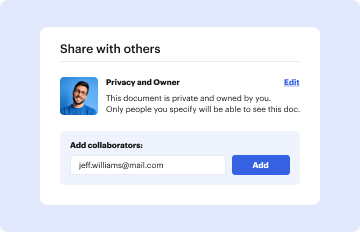

Who Typically Uses the Evaluation

The performance evaluation of pattern classifiers for handwritten digit recognition is primarily used by:

- Researchers and Academics: Those studying machine learning and pattern recognition who aim to publish findings in related fields.

- AI and ML Engineers: Professionals developing handwriting recognition systems seeking to implement the most effective algorithms.

- Educational Institutions: Universities and colleges that incorporate these evaluations into computer science and engineering courses.

Important Terms Related to the Evaluation

Certain terms are fundamental to understanding performance evaluation of handwritten pattern classifiers:

- Classifier: An algorithm that assigns data to categories (classes) using a model learned from training data.

- Neural Network: A model built from layers of interconnected units, loosely inspired by biological neurons, that learns to recognize patterns from data.

- Confusion Matrix: A table that counts, for each true class, how many test samples were assigned to each predicted class; correct classifications lie on its diagonal.

- Overfitting: When a model fits the training data too closely, including its noise and incidental details, so that it performs poorly on unseen data.
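A confusion matrix also yields per-class precision, recall, and F1-score. The sketch below computes them in plain Python for a made-up 3-class example; the function names and label lists are hypothetical, and a real evaluation would use the ten digit classes and the actual test predictions.

```python
"""Sketch: confusion matrix and per-class precision, recall, and F1.

Pure Python; the label lists below are made-up toy values.
"""
from typing import Dict, List, Sequence


def confusion_matrix(
    y_true: Sequence[int], y_pred: Sequence[int], n_classes: int
) -> List[List[int]]:
    """cm[t][p] counts samples of true class t predicted as class p."""
    cm = [[0] * n_classes for _ in range(n_classes)]
    for t, p in zip(y_true, y_pred):
        cm[t][p] += 1
    return cm


def per_class_scores(cm: List[List[int]]) -> Dict[int, Dict[str, float]]:
    """Precision, recall, and F1 for each class (0.0 when undefined)."""
    n = len(cm)
    scores = {}
    for c in range(n):
        tp = cm[c][c]
        predicted_c = sum(cm[t][c] for t in range(n))  # column sum
        actual_c = sum(cm[c])  # row sum
        precision = tp / predicted_c if predicted_c else 0.0
        recall = tp / actual_c if actual_c else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        scores[c] = {"precision": precision, "recall": recall, "f1": f1}
    return scores


y_true = [0, 0, 1, 1, 2, 2]
y_pred = [0, 1, 1, 1, 2, 0]
cm = confusion_matrix(y_true, y_pred, n_classes=3)
print(cm)  # [[1, 1, 0], [0, 2, 0], [1, 0, 1]]
overall_accuracy = sum(cm[c][c] for c in range(3)) / len(y_true)
print(overall_accuracy)
print(per_class_scores(cm))
```

Off-diagonal cells show which digit pairs a classifier confuses, which an overall accuracy figure hides.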

Technology Requirements for Evaluation

Conducting these evaluations typically requires specific software and hardware:

- Software Tools: Machine learning frameworks such as TensorFlow or PyTorch for implementing and training models.

- Hardware Requirements: Computers with considerable processing power and memory to handle large datasets and execute complex algorithms efficiently.

- Dataset Compatibility: Ensure datasets like NIST SD19 are properly formatted and accessible to the tools used for evaluation.
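What "properly formatted" means depends on the tools, but most classifiers expect every sample as a fixed-length numeric vector paired with a valid label. The sketch below assumes each sample is a binary bitmap stored as a 2-D list of 0/1 pixels with a digit label 0 to 9; the 16x16 default grid, the nearest-neighbor resampling, and the function names are illustrative choices, not the preprocessing used in the study.

```python
"""Sketch: validate a binary digit bitmap and turn it into a feature vector.

Assumptions: each sample is a 2-D list of 0/1 pixels (any height/width),
labels are digits 0-9, and 16x16 is an arbitrary target grid. Real
pipelines usually also normalize size, position, and slant.
"""
from typing import List, Sequence

Bitmap = Sequence[Sequence[int]]


def resize_nearest(bitmap: Bitmap, size: int) -> List[List[int]]:
    """Resample to size x size using nearest-neighbor sampling."""
    h, w = len(bitmap), len(bitmap[0])
    return [
        [bitmap[r * h // size][c * w // size] for c in range(size)]
        for r in range(size)
    ]


def to_feature_vector(bitmap: Bitmap, label: int, size: int = 16) -> List[int]:
    """Validate one sample and flatten it into a vector of length size*size."""
    if not 0 <= label <= 9:
        raise ValueError(f"label {label} is not a digit class")
    if not bitmap or not bitmap[0] or any(len(row) != len(bitmap[0]) for row in bitmap):
        raise ValueError("bitmap must be a non-empty rectangular grid")
    if any(px not in (0, 1) for row in bitmap for px in row):
        raise ValueError("bitmap must be binary (0/1)")
    grid = resize_nearest(bitmap, size)
    return [px for row in grid for px in row]


# A 4x4 toy bitmap of a vertical stroke, resized to 8x8 (64 features).
stroke = [[0, 1, 1, 0]] * 4
vec = to_feature_vector(stroke, label=1, size=8)
print(len(vec), sum(vec))  # 64 32
```

Rejecting malformed samples up front keeps format errors from surfacing later as misleading accuracy numbers.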

Examples of Using Performance Evaluation

Practical applications and scenarios demonstrate the relevance of this type of evaluation:

- Handwriting Recognition in Banking: Check-processing systems that use high-performing classifiers to read and verify handwritten amounts and other check details.

- Postal Sorting Systems: Automated mail sorting that reads handwritten addresses and postal codes for efficient distribution.

- Educational Technology: Developing applications for grading handwritten exams automatically with high accuracy.

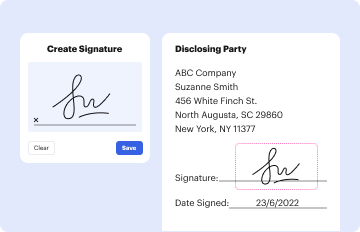

Legal Use and Compliance

Using and implementing pattern classifiers for handwritten recognition requires careful adherence to legal standards:

- Data Privacy: Ensure compliance with regulations like GDPR or CCPA when using handwritten data.

- Intellectual Property: Respect the rights attached to proprietary algorithms, and use open-source or properly licensed models.

- Bias and Ethics: Address potential biases in classifiers to maintain fairness across diverse handwriting samples.
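One practical way to surface bias is to report accuracy separately for each group of writers rather than a single overall number; a large gap suggests the classifier generalizes unevenly. The sketch below assumes every test result carries a group tag (for example, writer population or data source); the group names, results, and function name are hypothetical.

```python
"""Sketch: check for accuracy gaps across groups of writers.

Assumption: each test result carries a group tag. Group names and
results below are hypothetical.
"""
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def accuracy_by_group(results: Iterable[Tuple[str, int, int]]) -> Dict[str, float]:
    """results holds (group, true_label, predicted_label) triples."""
    correct: Dict[str, int] = defaultdict(int)
    total: Dict[str, int] = defaultdict(int)
    for group, y_true, y_pred in results:
        total[group] += 1
        correct[group] += int(y_true == y_pred)
    return {g: correct[g] / total[g] for g in total}


results = [
    ("group_a", 3, 3), ("group_a", 7, 7), ("group_a", 1, 1), ("group_a", 4, 9),
    ("group_b", 3, 8), ("group_b", 7, 7), ("group_b", 1, 7), ("group_b", 4, 4),
]
per_group = accuracy_by_group(results)
gap = max(per_group.values()) - min(per_group.values())
print(per_group, gap)  # {'group_a': 0.75, 'group_b': 0.5} 0.25
```

With realistic test sets, each group needs enough samples for its accuracy estimate to be meaningful before a gap is interpreted as bias.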

By comparing classifiers under a consistent protocol, this performance evaluation helps users make informed decisions when building handwriting recognition systems that must be accurate, reliable, and efficient in their intended applications.