Not all formats, such as LWP, are designed to be edited quickly. Even though many tools can help us edit various file formats, no one has yet created a true one-size-fits-all solution.

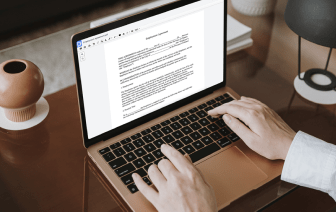

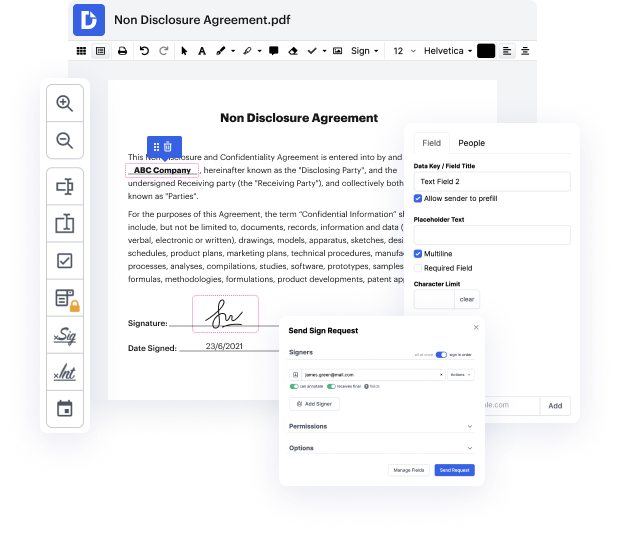

DocHub offers an easy and efficient solution for editing, managing, and storing documents in the most popular formats. You don't have to be tech-savvy to strike out sample text in LWP or make other changes. DocHub is powerful enough to make the process straightforward for everyone.

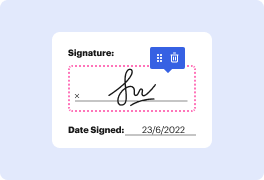

Our tool lets you edit and adjust documents, send data back and forth, create interactive documents for collecting information, encrypt and protect documents, and set up eSignature workflows. You can also create templates from documents you use regularly.

You'll find plenty of other features in DocHub, such as integrations that let you connect your LWP form to a wide range of business applications.

DocHub is a straightforward, cost-effective way to handle documents and streamline workflows. It offers a wide range of capabilities, from document creation and editing to eSignature services and web document building. The application can export your documents in multiple formats while keeping them protected and adhering to strict data security standards.

Give DocHub a go and see just how straightforward your editing process can be.

In today's short class, we're going to take a look at some example Perl code, and we're going to write a web crawler using Perl. This is going to be just a very simple piece of code that goes to a website, downloads the raw HTML, iterates through that HTML to find the URLs, retrieves those URLs, and stores them as files. We're going to create a series of files, and in our initial iteration we're going to choose just 10 or so websites, so that we get to the end and don't download everything. If you want to play along at home, you can of course download as many websites as you have disk space for.

So we'll choose websites at random, and what we're going to write is a series of HTML files numbered 0.html, 1.html, 2.html, and so on, and then a map file that contains the number and the original URL.

So let's get started with the Perl code. We're going to write a program called webcrawler.pl h…
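The steps described in the transcript (fetch a page, pull out its URLs, queue them, save each page as a numbered file, and record the number-to-URL mapping) can be sketched in Perl roughly as follows. This is a minimal sketch, not the presenter's code: the sub names `extract_urls`, `http_fetch`, and `crawl`, the `map.txt` filename with its tab-separated layout, and the breadth-first queue are all assumptions made for illustration. Downloads use the core HTTP::Tiny module (fetching `https://` URLs additionally requires IO::Socket::SSL and Net::SSLeay), and links are found with a regex that only picks up absolute `http(s)` URLs in `href` attributes, which is a simplification of real HTML parsing.

```perl
#!/usr/bin/perl
use strict;
use warnings;
use HTTP::Tiny;    # core module since Perl 5.14

# Hypothetical helper names; not taken from the original video.

# Pull absolute http(s) links out of href="..." or href='...' attributes.
# A regex is a deliberate simplification: relative links are ignored and
# a real crawler would use a proper HTML parser.
sub extract_urls {
    my ($html) = @_;
    my @urls = $html =~ m{href\s*=\s*["'](https?://[^"'\s>]+)["']}gi;
    return @urls;
}

# Default downloader: returns the page body, or undef on failure.
sub http_fetch {
    my ($url) = @_;
    my $res = HTTP::Tiny->new(timeout => 10)->get($url);
    return $res->{success} ? $res->{content} : undef;
}

# Breadth-first crawl from $opt{seed}, saving at most $opt{limit} pages as
# $dir/0.html, $dir/1.html, ... and writing "number<TAB>url" lines to
# $dir/map.txt (assumed format). The output directory must already exist.
sub crawl {
    my (%opt) = @_;
    my $fetch = $opt{fetch} // \&http_fetch;   # injectable for offline testing
    my $limit = $opt{limit} // 10;
    my $dir   = $opt{dir}   // '.';
    my @queue = ($opt{seed});
    my %seen;
    my $n = 0;

    open my $map, '>', "$dir/map.txt" or die "Cannot write $dir/map.txt: $!";
    while (@queue && $n < $limit) {
        my $url = shift @queue;
        next if $seen{$url}++;        # skip URLs already visited
        my $html = $fetch->($url);
        next unless defined $html;    # skip failed downloads

        open my $out, '>', "$dir/$n.html" or die "Cannot write $dir/$n.html: $!";
        print {$out} $html;
        close $out;
        print {$map} "$n\t$url\n";
        $n++;

        push @queue, extract_urls($html);   # enqueue newly found links
    }
    close $map;
    return $n;    # number of pages saved
}

# Usage: perl webcrawler.pl https://example.com/ [limit] [output-dir]
if (!caller && @ARGV) {
    my $saved = crawl(
        seed  => $ARGV[0],
        limit => $ARGV[1] // 10,
        dir   => $ARGV[2] // '.',
    );
    print "Saved $saved pages\n";
}
```

The `fetch` option exists so the crawl logic can be exercised without the network, for example by passing a sub that looks pages up in a hash; the `%seen` hash keeps the crawler from saving the same URL twice when pages link back to each other.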