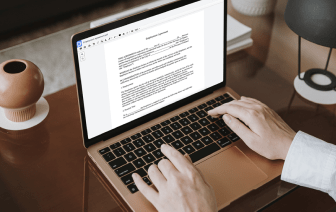

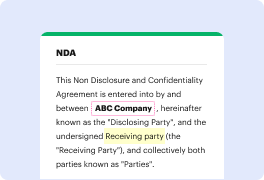

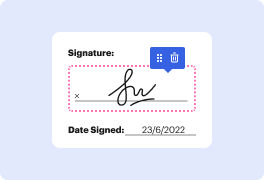

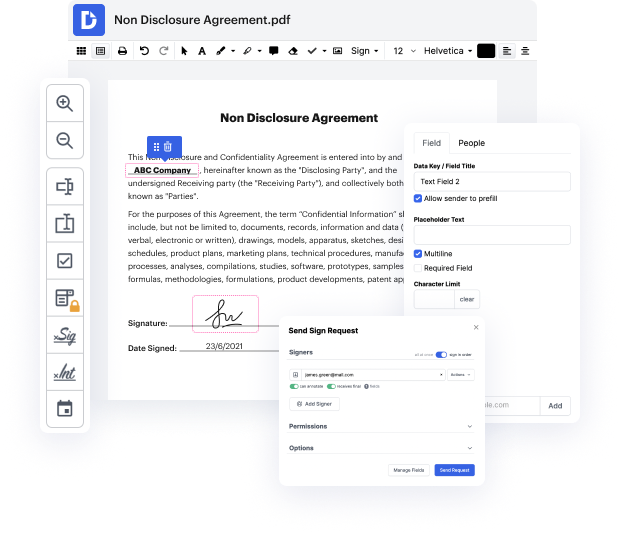

LWP may not always be the easiest format to work with. Even though many editing tools are available on the market, not all of them are easy to use. We developed DocHub to make editing effortless, no matter the file format. With DocHub, you can quickly and easily wipe type in LWP files. On top of that, DocHub offers a range of additional tools, such as form creation, automation and management, industry-compliant eSignature services, and integrations.

DocHub also lets you save effort by creating form templates from documents that you use regularly. On top of that, you can benefit from our wide range of integrations, which let you connect our editor to your most-used apps with ease. This makes it fast and simple to work with your documents without any slowdowns.

DocHub is a handy tool for both personal and corporate use. Not only does it offer an all-encompassing suite of capabilities for form creation, editing, and eSignature implementation, but it also has a range of features that prove useful for building both complex and simple workflows. Anything uploaded to our editor is stored securely according to leading industry standards that safeguard users' data.

Make DocHub your go-to option and streamline your form-centered workflows with ease!

In today's short class we're going to take a look at some example Perl code, and we're going to write a web crawler using Perl. This is going to be just a very simple piece of code that goes to a website, downloads the raw HTML, iterates through that HTML to find the URLs, retrieves those URLs, and stores each one as a file. We're going to create a series of files, and in our initial iteration we're going to choose just about 10 or so websites, just so that we get to the end and don't download everything. If you want to play along at home, you can of course download as many websites as you have disk space for. So we'll choose websites at random, and what we're going to write is a series of HTML files numbered 0.html, 1.html, 2.html and so on, and then a map file that contains the number and the original URL. So let's get started with the Perl code. We're going to write a program called web crawler dot pl h
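The crawler described in the transcript can be sketched in Perl roughly as follows. This is a minimal sketch under stated assumptions, not the code from the video: the sub names `extract_links`, `http_fetch` and `crawl`, the map file name `map.txt`, its tab-separated `number<TAB>url` layout, the breadth-first visiting order, and the regex-based link extraction are all hypothetical choices. Downloads use the core `HTTP::Tiny` module; the video may well use a different module such as LWP.

```perl
#!/usr/bin/perl
# Minimal crawler sketch: fetch a page, save its raw HTML as N.html, record
# "N<TAB>url" in a map file, extract links, and repeat until a page limit.
# Hypothetical names: extract_links, http_fetch, crawl, and the file map.txt.
use strict;
use warnings;
use HTTP::Tiny;    # core module since Perl 5.14
use File::Spec;

# Pull absolute http(s) links out of raw HTML. The regex is a deliberate
# simplification: relative links are skipped and unusual markup may be missed.
sub extract_links {
    my ($html) = @_;
    my @links;
    while ($html =~ /href\s*=\s*["'](https?:\/\/[^"'#\s]+)/gi) {
        push @links, $1;
    }
    return @links;
}

# Default fetcher: return the response body on success, undef on failure.
sub http_fetch {
    my ($url) = @_;
    my $res = HTTP::Tiny->new(timeout => 10)->get($url);
    return $res->{success} ? $res->{content} : undef;
}

# Breadth-first crawl from $args{start}, stopping once $args{max_pages}
# pages have been saved. Returns the saved URLs in file-number order.
sub crawl {
    my %args  = @_;
    my $max   = $args{max_pages} // 10;
    my $dir   = $args{out_dir}   // '.';
    my $fetch = $args{fetch}     // \&http_fetch;

    my @queue = ($args{start});
    my %seen  = ($args{start} => 1);
    my @saved;

    open my $map, '>', File::Spec->catfile($dir, 'map.txt')
        or die "cannot write map file: $!";

    while (@queue && @saved < $max) {
        my $url  = shift @queue;
        my $html = $fetch->($url);
        next unless defined $html;    # skip pages that failed to download

        my $n = scalar @saved;        # numbering: 0.html, 1.html, 2.html, ...
        open my $out, '>', File::Spec->catfile($dir, "$n.html")
            or die "cannot write $n.html: $!";
        print {$out} $html;
        close $out;
        print {$map} "$n\t$url\n";
        push @saved, $url;

        # Queue every link not seen before.
        for my $link (extract_links($html)) {
            next if $seen{$link}++;
            push @queue, $link;
        }
    }
    close $map;
    return @saved;
}

# Run only when executed directly with a start URL, for example:
#   perl crawler.pl https://example.com/
if (!caller() && @ARGV) {
    my @saved = crawl(start => $ARGV[0], max_pages => 10);
    print scalar(@saved), " pages saved\n";
}
```

The default limit of 10 pages mirrors the "about 10 or so websites" mentioned in the transcript; raise `max_pages` if you have the disk space. Passing your own `fetch` code reference lets you exercise the crawl logic offline, without touching the network.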