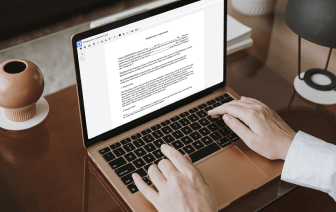

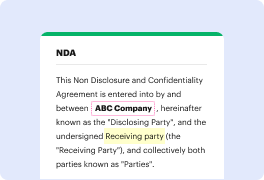

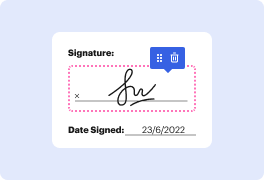

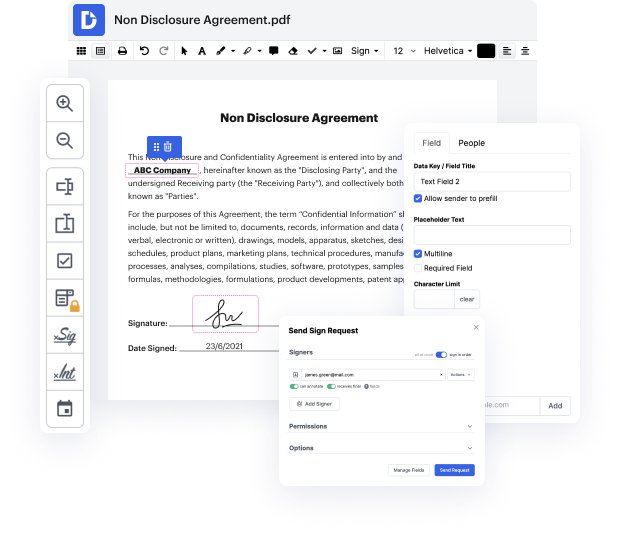

No matter how complex or difficult your documents are to modify, DocHub offers an easy way to edit them. You can alter any element in your spreadsheet effortlessly. Whether you need to tweak a single component or the whole form, you can rely on our robust solution for quick, high-quality results.

Moreover, it ensures that the output document is always ready to use, so you can get on with your projects without any slowdowns. Our comprehensive feature set also includes advanced productivity tools and a library of templates, letting you make the most of your workflows without wasting time on repetitive tasks. You can also access your documents from any device and integrate DocHub with other solutions.

DocHub can handle all of your form management tasks. With a wide range of features, you can create and export documents however you choose. Everything you upload to DocHub's editor is stored securely for as long as you need it, with strict security and data protection frameworks in place.

Try DocHub today and make managing your paperwork easier!

Hello guys, welcome back to my channel. Today we're going to learn different data cleaning processes in Excel. We're going to learn how to remove duplicates (duplicates are values that are repeated twice or more), how to split a column, how to merge or combine columns, and how to find and replace values. So stay tuned to the end of the video, and don't forget to subscribe and click on the notification bell to be notified when a video is posted. Bye!

Hello guys, okay, so we are going to go into the class now. The first thing we are going to do today is learn how to remove duplicates. Remember what I said: duplicates are values that appear more than once, and you don't want to go ahead and do your analysis with duplicated values. So how do we go about removing the duplicates?
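To make the idea of "removing duplicates" concrete outside Excel, here is a minimal Python sketch. It assumes each spreadsheet row is represented as a dictionary keyed by column header, and it follows the same rule as Excel's Remove Duplicates command on the Data tab: keep the first row of each group of matching rows and drop the later repeats, optionally comparing only selected columns. The function name `remove_duplicates` and the sample rows are hypothetical illustrations, not anything shown in the video, and the sketch compares values exactly as stored.

```python
from typing import Dict, Hashable, List, Optional, Sequence

# Hypothetical representation: one spreadsheet row = {column header: cell value}.
Row = Dict[str, Hashable]


def remove_duplicates(rows: List[Row], columns: Optional[Sequence[str]] = None) -> List[Row]:
    """Return the rows with repeats removed, keeping the first occurrence.

    If `columns` is given, two rows count as duplicates when they match on
    those columns only; otherwise every column in the row is compared.
    """
    seen = set()
    unique_rows: List[Row] = []
    for row in rows:
        if columns is None:
            # Compare the whole row; sorting by header makes column order irrelevant.
            key = tuple(sorted(row.items()))
        else:
            # Compare only the chosen columns (missing cells are treated as None).
            key = tuple(row.get(col) for col in columns)
        if key not in seen:
            seen.add(key)
            unique_rows.append(row)
    return unique_rows


# Hypothetical sample data for illustration only.
sales = [
    {"Name": "Ada", "City": "Lagos", "Amount": 120},
    {"Name": "Ben", "City": "Abuja", "Amount": 80},
    {"Name": "Ada", "City": "Lagos", "Amount": 120},  # exact repeat of the first row
    {"Name": "Ada", "City": "Lagos", "Amount": 95},   # same Name/City, different Amount
]

print(len(remove_duplicates(sales)))                    # 3
print(len(remove_duplicates(sales, ["Name", "City"])))  # 2
```

Which columns you compare matters: the last sample row only counts as a duplicate when Amount is left out of the comparison, which mirrors ticking or unticking columns in Excel's Remove Duplicates dialog.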