Document generation and approval are central to every organization. Whether you handle large batches of files or a single agreement, you need to stay productive. Finding an online platform that solves your most common document generation and approval problems can take a lot of work. Many online apps offer only a limited set of editing and eSignature features, and only some of those help with CSV formatting. A solution that handles any format and any task is the better choice.

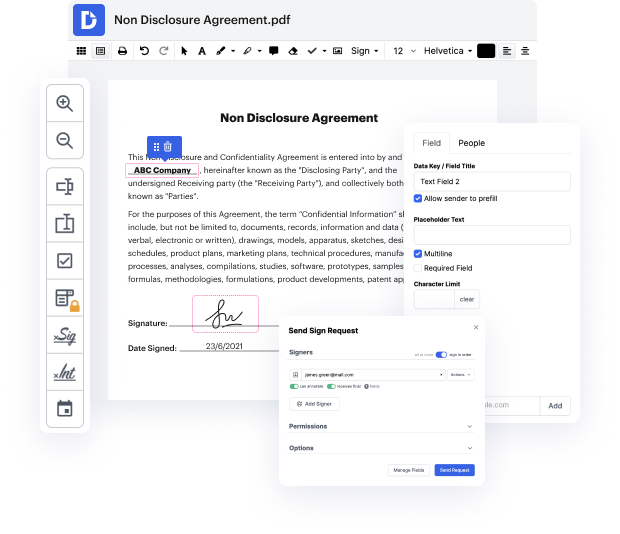

Take document management and generation to a new level of efficiency and quality without settling for an awkward interface or an expensive subscription plan. DocHub gives you the tools to work efficiently with all file types, including CSV, and to complete tasks of any complexity. Edit, organize, and create reusable fillable forms with ease. Add a URL to a CSV file at any time, and store your completed documents securely in your user profile or in one of the integrated cloud storage apps.

DocHub offers lossless editing, signature collection, and CSV management at a professional level. You don't need to work through tedious tutorials or spend hours learning the software. Make secure, high-quality document editing a standard part of your daily workflow.

Hello everybody. As you can see, today we are going to scrape a list of URLs from a CSV. My subscriber wants to extract the text from all of the pages of all of the sites; he's after all of the text. He wrote: "How can I extract all the pages and subpages from the list of URLs in the CSV? I've found a method, but it only works for a home page. Can anyone recode it and tell me how to extract the full data from a website, as we can't use XPath for each URL?" I originally provided him with this, and with Beautiful Soup it worked fine, not a problem. The issue was that he wanted the text from all of the pages and not just the first page. So what I've done instead is the other thing; I couldn't actually come up with another idea. If you'd like to see that, this is the list of URLs. I don't want to show them too closely and give the game away, but we've got 469 URLs. I've actually been a bit crafty and used Vim to strip o…
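The transcript states the goal (read URLs from a CSV, then pull the text from every page of each site rather than only the home page), but the method itself is cut off. Below is a minimal sketch of one way to do it, not the video author's code: a breadth-first crawl that follows links only on the starting URL's host. It assumes the CSV has a header column named `url` (hypothetical), and it swaps Beautiful Soup for the standard library's `html.parser` so it runs without extra packages. The names `read_urls`, `crawl_site`, `fetch_with_urllib`, and the `max_pages` cap are illustrative, not part of any library.

```python
import csv
import io
from collections import deque
from html.parser import HTMLParser
from typing import Callable, Dict, List
from urllib.parse import urldefrag, urljoin, urlparse
from urllib.request import urlopen


class PageParser(HTMLParser):
    """Collects visible text and <a href> targets from one HTML page."""

    def __init__(self) -> None:
        super().__init__()
        self.links: List[str] = []
        self.text_parts: List[str] = []
        self._skip_depth = 0  # > 0 while inside <script> or <style>

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1
        elif tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth and data.strip():
            self.text_parts.append(data.strip())


def read_urls(csv_text: str, column: str = "url") -> List[str]:
    """Return the non-empty values of one (assumed) column from CSV text."""
    urls = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        value = (row.get(column) or "").strip()
        if value:
            urls.append(value)
    return urls


def fetch_with_urllib(url: str) -> str:
    """Download one page and decode it using the declared charset."""
    with urlopen(url, timeout=10) as resp:
        charset = resp.headers.get_content_charset() or "utf-8"
        return resp.read().decode(charset, errors="replace")


def crawl_site(start_url: str, fetch: Callable[[str], str],
               max_pages: int = 50) -> Dict[str, str]:
    """Breadth-first crawl of pages on start_url's host; returns {url: text}."""
    host = urlparse(start_url).netloc
    queue = deque([start_url])
    seen = {start_url}
    pages: Dict[str, str] = {}
    while queue and len(pages) < max_pages:
        url = queue.popleft()
        try:
            html = fetch(url)
        except Exception:
            continue  # skip pages that fail to download
        parser = PageParser()
        parser.feed(html)
        parser.close()  # flush any trailing text
        pages[url] = " ".join(parser.text_parts)
        for href in parser.links:
            # Resolve relative links and drop #fragments so /page and /page#x match.
            link, _ = urldefrag(urljoin(url, href))
            if urlparse(link).netloc == host and link not in seen:
                seen.add(link)
                queue.append(link)
    return pages
```

For a real run, read the file (the name `urls.csv` here is hypothetical), then call `crawl_site(site, fetch_with_urllib)` for each value returned by `read_urls(...)`. Passing the fetch function in keeps the crawler testable offline and leaves room to add request delays or robots.txt checks without touching the link-following logic. Pages that build their content with JavaScript will come back mostly empty, since nothing here renders scripts.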

At DocHub, your data security is our priority. We follow HIPAA, SOC 2, GDPR, and other standards, so you can work on your documents with confidence.
