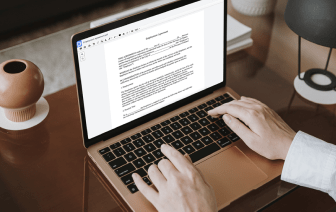

Document generation and approval are a central focus of every company. Whether you are working with large batches of files or a single agreement, you need to stay at peak efficiency. Choosing an online platform that tackles your most frequent document generation and approval problems can take a lot of work. Many online platforms offer only a limited set of editing and eSignature features, some of which could help with LWP formatting. A solution that handles any format and any task is an outstanding choice when selecting an application.

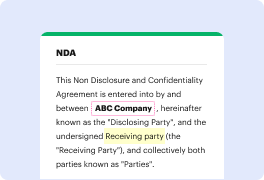

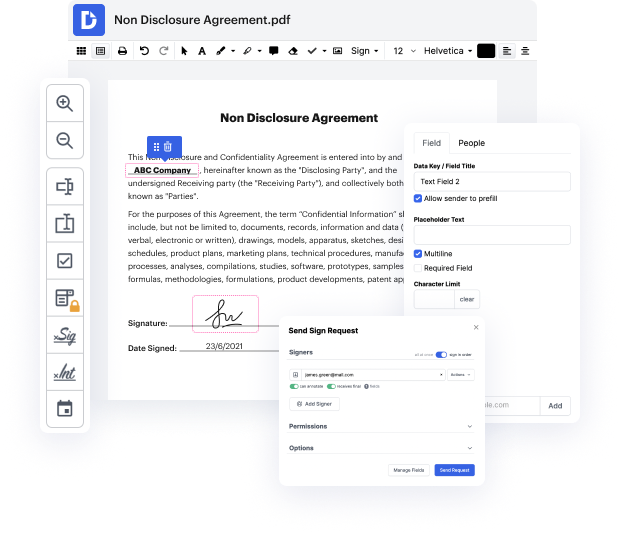

Take file management and generation to a new level of simplicity and sophistication without settling for an awkward interface or expensive subscription plans. DocHub offers tools and features to work efficiently with all file types, including LWP, and to carry out tasks of any complexity. Edit, arrange, and create reusable fillable forms with ease. Get complete freedom and flexibility to omit code in LWP at any time, and safely store all of your completed documents in your user profile or in one of several integrated cloud storage platforms.

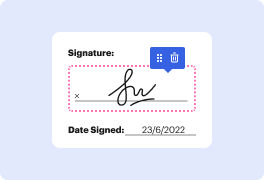

DocHub offers loss-free editing, signature collection, and LWP management at a professional level. You don’t have to go through tedious tutorials or spend hours learning the platform. Make top-tier, secure file editing a standard practice in your day-to-day workflows.

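The transcript that follows outlines a very simple crawler. It fetches a page, pulls the URLs out of the raw HTML, fetches those in turn, saves each page as a numbered file (0.html, 1.html, ...), and writes a map file pairing each number with its original URL.

The block below is a minimal sketch of that loop under stated assumptions. It is not the code from the class. It uses Perl's core HTTP::Tiny module rather than LWP. It follows only absolute http/https links found in `href` attributes, using a regex instead of a real HTML parser. It calls the map file `map.txt`, a hypothetical name since the transcript does not give one. It stops after 10 pages. Fetching https URLs with HTTP::Tiny also requires IO::Socket::SSL to be installed.

```perl
#!/usr/bin/perl
# Minimal breadth-first crawler sketch (hypothetical; not the class's code).
# Assumptions: core HTTP::Tiny instead of LWP, only absolute http(s) links
# are followed, "map.txt" is a made-up name for the map file.
use strict;
use warnings;
use HTTP::Tiny;
use File::Spec;

# Pull absolute http/https URLs out of href="..." or href='...' attributes.
# A regex is enough for a toy crawler but is not a real HTML parser.
sub extract_links {
    my ($html) = @_;
    my @links;
    while ($html =~ m{href\s*=\s*["'](https?://[^"'\s>]+)["']}gi) {
        push @links, $1;
    }
    return @links;
}

# Write page number $n to "$dir/$n.html" and append "$n<TAB>$url" to the map file.
sub save_page {
    my ($dir, $n, $url, $html) = @_;
    my $file = File::Spec->catfile($dir, "$n.html");
    open my $out, '>:raw', $file or die "Cannot write $file: $!";
    print {$out} $html;
    close $out;

    my $map = File::Spec->catfile($dir, 'map.txt');
    open my $m, '>>', $map or die "Cannot append to $map: $!";
    print {$m} "$n\t$url\n";
    close $m;
    return $file;
}

# Crawl breadth-first from the seed URLs until $limit pages have been saved.
sub crawl {
    my ($seeds, $limit, $dir) = @_;
    my $http  = HTTP::Tiny->new(timeout => 10);
    my @queue = @$seeds;
    my %seen;
    my $count = 0;

    while (@queue && $count < $limit) {
        my $url = shift @queue;
        next if $seen{$url}++;                # skip URLs already visited
        my $res = $http->get($url);
        next unless $res->{success};          # ignore failed downloads
        my $html = $res->{content} // '';
        save_page($dir, $count++, $url, $html);
        push @queue, extract_links($html);    # enqueue newly found links
    }
    return $count;
}

# Crawl only when seed URLs are passed on the command line.
if (@ARGV) {
    my $saved = crawl([@ARGV], 10, '.');
    print "Saved $saved pages\n";
}
```

Usage, for example: `perl webcrawler.pl http://example.com/`. This writes `0.html`, `1.html`, and so on, plus `map.txt`, into the current directory. A breadth-first queue with a `%seen` hash keeps the crawler from revisiting pages. The page limit mirrors the transcript's choice of stopping after about 10 sites.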
In today's short class, we're going to take a look at some example Perl code, and we're going to write a web crawler using Perl. This is going to be just a very simple piece of code that's going to go to a website, download the raw HTML, iterate through that HTML to find the URLs, retrieve those URLs, and store each one as a file. We're going to create a series of files, and in our initial iteration we're going to choose just 10 or so websites, so that we get to the end and don't download everything. If you want to play along at home, you can of course download as many websites as you have disk space for.

So we'll choose websites at random, and what we're going to write is a series of HTML files numbered 0.html, 1.html, 2.html, and so on, and then a map file that contains the number and the original URL. So let's get started with the Perl code. We're going to write a program called webcrawler.pl. Here's our web crawler. We're going to start, as we've done before, with what's called the