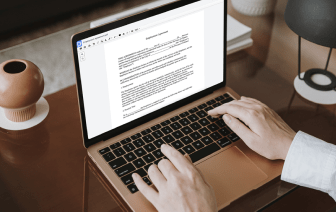

Anyone who works with documents every day knows how much productivity depends on convenient access to editing tools. When your Payment Receipt files must be saved in a different format or include complex elements, conventional text editors can make the job difficult. A simple formatting error can waste the time you spent trying to link a header in Payment Receipt, and such a basic task should not feel challenging.

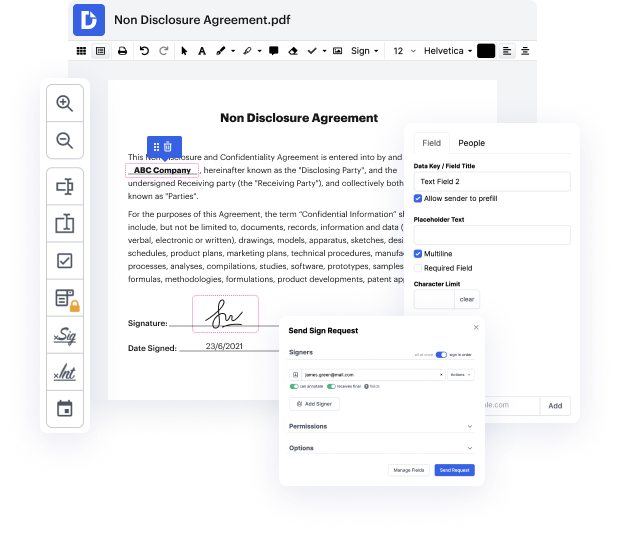

With a multitool like DocHub, these kinds of concerns will not come up in your work. This powerful web-based editing solution helps you easily handle paperwork saved in Payment Receipt. It is simple to create, modify, share, and convert your files wherever you are. All you need to use our interface is a stable internet connection and a DocHub account. You can sign up within a few minutes. Here is how easy the process can be.

With a well-designed editing solution, you will spend minimal time figuring out how it works. Start being productive the moment you open our editor with a DocHub account. We will ensure your go-to editing tools are always available whenever you need them.

In this video we're going to be talking about HTTP headers: what they are and how we would want to use custom ones when we're web scraping.

So, HTTP stands for Hypertext Transfer Protocol, and it's designed to allow web browsers and web servers to talk to each other and transfer data, usually HTML or maybe JSON, for displaying the content of a website. The client, which is us, initiates the request of the server and waits for the response. Within both the request and the response we have these headers: additional text that is used to provide information about the request, to help each party work out how best to serve and deal with that data.

The request headers are the ones that we're most interested in as web scrapers, as we want our programs to seem as human-like as possible. We're going to cover the four most useful headers and what values you might want to send along with them when you're web scraping.

So what do these headers look like? Well, each request is categorized into a few different reques...
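As a rough illustration of the idea described in the transcript (attaching custom request headers so a scraper's request looks more like one from a browser), here is a minimal sketch using Python's standard `urllib.request`. The transcript excerpt is cut off before it names its four headers, so the header names and values below are assumptions chosen for illustration, not the ones from the video. `BROWSER_LIKE_HEADERS` and `build_request` are hypothetical names, and `https://www.example.com/` is a placeholder URL.

```python
from typing import Dict, Optional
from urllib.request import Request

# Assumed, illustrative values: these are standard HTTP request headers,
# but they are NOT taken from the video, whose list is truncated.
BROWSER_LIKE_HEADERS: Dict[str, str] = {
    "User-Agent": (
        "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 "
        "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36"
    ),
    "Accept": "text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8",
    "Accept-Language": "en-US,en;q=0.9",
    "Referer": "https://www.example.com/",
}


def build_request(url: str, headers: Optional[Dict[str, str]] = None) -> Request:
    """Build (but do not send) a GET request carrying custom headers.

    Caller-supplied headers override the browser-like defaults.
    """
    merged = dict(BROWSER_LIKE_HEADERS)
    merged.update(headers or {})
    return Request(url, headers=merged, method="GET")


if __name__ == "__main__":
    req = build_request("https://www.example.com/")
    # Show the headers that would be sent with the request.
    for name, value in req.header_items():
        print(f"{name}: {value}")
```

The sketch only constructs the request object. Passing it to `urllib.request.urlopen(req)` would actually send it, and the server would then see these header values instead of the library defaults.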