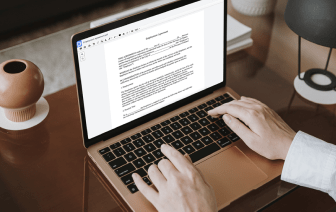

Every document editing solution has its flaws, and although there are many tools on the market, not all of them will fit your particular needs. DocHub makes it simpler to create, modify, and handle documents, and not just in PDF format.

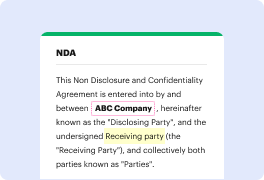

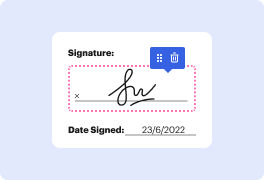

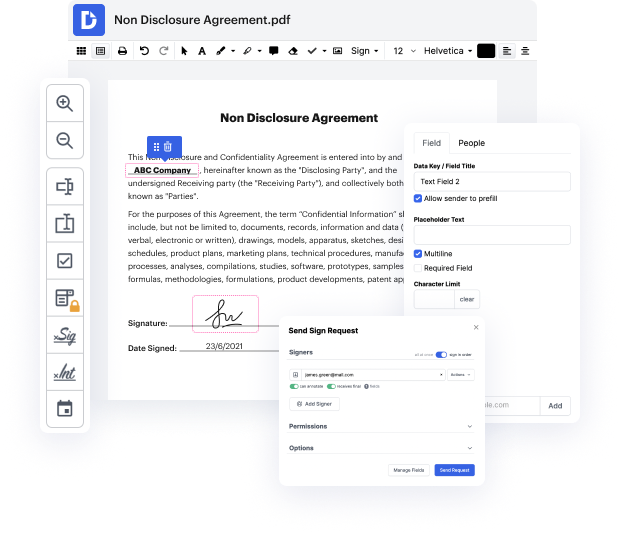

Whenever you need to quickly inject space in LWP, DocHub has you covered. You can modify document components such as text, images, and structure. Customize, organize, and encrypt documents, build eSignature workflows, create fillable documents for stress-free information collection, and more. Our templates feature lets you generate templates from the documents you work with most often.

You can also stay connected to your go-to productivity tools and CRM platforms while managing your documents.

One of the most remarkable things about DocHub is that it can handle document tasks of any complexity, whether you need a quick edit or more thorough editing. It includes an all-in-one document editor, website document builder, and workflow-centered capabilities. Moreover, you can rest assured that your documents will be legally binding and adhere to all protection frameworks.

Shave some time off your tasks by leveraging DocHub's capabilities that make managing documents straightforward.

In today's short class we're going to take a look at some example Perl code, and we're going to write a web crawler using Perl. This is going to be just a very simple piece of code that's going to go to a website, download the raw HTML, iterate through that HTML and find the URLs, and retrieve those URLs and store them as a file. We're going to create a series of files, and in our initial iteration we're going to choose just about 10 or so websites, just so that we get to the end and we don't download everything. If you want to play along at home, you can of course download as many websites as you have disk space for.

So we'll choose websites at random, and what we're going to write is a series of HTML files numbered 0.html, 1.html, 2.html, and so on, and then a map file that contains the number and the original URL. So let's get started with the Perl code. So we're going to write a program called web crawler dot pl h
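The transcript stops before any code appears, so the following is only a minimal sketch of the crawler it describes, not the code written in the class. Several things here are assumptions: the core module `HTTP::Tiny` for downloading (the class may well use a different module, such as LWP), a simple regular expression for finding URLs, the map file name `map.txt`, the helper names `extract_links`, `crawl`, and `http_fetch`, and visiting links in the order they are found rather than at random.

```perl
#!/usr/bin/perl
use strict;
use warnings;
use HTTP::Tiny;    # assumption: the class may use a different module

# Pull absolute http(s) URLs out of href attributes with a simple regex.
# This is a rough approach; a real HTML parser would be more robust.
sub extract_links {
    my ($html) = @_;
    my (%seen, @links);
    while ($html =~ /href\s*=\s*["'](https?:\/\/[^"'\s>]+)["']/gi) {
        push @links, $1 unless $seen{$1}++;
    }
    return @links;
}

# Crawl from $start, saving at most $limit pages into $dir as
# 0.html, 1.html, ... plus a map file of "number<TAB>url" lines.
# $fetch is a code ref that returns the HTML for a URL, or undef on failure.
sub crawl {
    my ($start, $limit, $dir, $fetch) = @_;
    my @queue  = ($start);
    my %queued = ($start => 1);
    my $count  = 0;

    open my $map, '>', "$dir/map.txt" or die "Cannot write map file: $!";
    while (@queue && $count < $limit) {
        my $url  = shift @queue;
        my $html = $fetch->($url);
        next unless defined $html;    # skip pages that failed to download

        open my $out, '>', "$dir/$count.html" or die "Cannot write page: $!";
        print {$out} $html;
        close $out;
        print {$map} "$count\t$url\n";
        $count++;

        # Queue every link we have not already queued.
        for my $link (extract_links($html)) {
            push @queue, $link unless $queued{$link}++;
        }
    }
    close $map;
    return $count;
}

# Default fetcher built on HTTP::Tiny.
sub http_fetch {
    my ($url) = @_;
    my $res = HTTP::Tiny->new(timeout => 10)->get($url);
    return $res->{success} ? $res->{content} : undef;
}

# Run only when a start URL is given on the command line.
crawl($ARGV[0], 10, '.', \&http_fetch) if @ARGV;
```

Saved under a name of your choosing and run with a start URL as its only argument, this would stop after 10 pages, matching the "10 or so websites" limit mentioned above. Passing the fetcher in as a code ref is a design choice made here so the crawl logic can be tried without network access.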