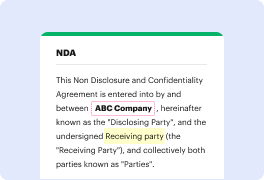

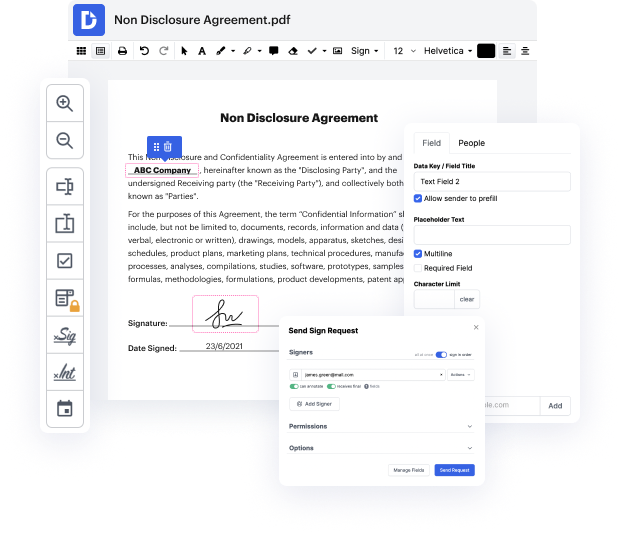

Contrary to popular belief, working on files online can be hassle-free. Some file formats might seem too challenging to deal with, but with the right solution, like DocHub, it's easy to modify any file with minimal resources. DocHub is your go-to solution for tasks as simple as fine-tuning label text in a single file for free or as daunting as dealing with a huge stack of complex paperwork.

There are many tools for online file editing. Yet not all of them are powerful enough to serve both individuals who need only minimal editing functionality and small businesses that look for more advanced features for collaborating within their document-based workflows. DocHub is a multi-purpose service that streamlines document management online. Sign up for DocHub now!

This tutorial uses version 4.0 of the Transformers library to classify toxic comments. Fine-tuning a pretrained model with PyTorch produces a classifier that can flag comments as toxic or threatening. The newly released version of the Transformers library is easier to use and includes implementations of models such as ALBERT and BART. Let's get started with classifying comments using this powerful library.
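As a rough illustration of what such fine-tuning can look like, here is a minimal sketch of a single training step for multi-label comment classification. The tutorial does not say which checkpoint, labels, or hyperparameters it uses, so the model name `bert-base-cased`, the two-entry `LABELS` list, the learning rate, and the helper names `fine_tune_step` and `logits_to_labels` are all assumptions made for this example.

```python
import math

# Hypothetical label set for illustration; the tutorial only mentions
# "toxic" and "threatening" comments.
LABELS = ["toxic", "threat"]


def logits_to_labels(logits, threshold=0.5):
    """Apply an independent sigmoid to each logit and keep labels at or above the threshold."""
    probs = [1.0 / (1.0 + math.exp(-z)) for z in logits]
    return [label for label, p in zip(LABELS, probs) if p >= threshold]


def fine_tune_step(texts, targets, model_name="bert-base-cased", lr=2e-5):
    """Run one optimisation step on a batch of comments.

    The checkpoint name and learning rate are assumed values, not taken
    from the tutorial. Pretrained weights are downloaded on first use.
    """
    # Heavy imports stay local so logits_to_labels works without them.
    import torch
    from transformers import AutoModelForSequenceClassification, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_name)
    model = AutoModelForSequenceClassification.from_pretrained(
        model_name, num_labels=len(LABELS)
    )
    optimizer = torch.optim.AdamW(model.parameters(), lr=lr)
    # Multi-label setup: each label gets its own sigmoid, so use BCE on raw logits.
    loss_fn = torch.nn.BCEWithLogitsLoss()

    batch = tokenizer(
        texts, padding=True, truncation=True, max_length=128, return_tensors="pt"
    )
    model.train()
    logits = model(**batch).logits  # shape: (batch_size, num_labels)
    loss = loss_fn(logits, torch.tensor(targets, dtype=torch.float))
    loss.backward()
    optimizer.step()
    optimizer.zero_grad()
    return loss.item(), logits.detach().tolist()
```

A call such as `fine_tune_step(["You are awful"], [[1.0, 0.0]])` would return the batch loss and the raw logits, and `logits_to_labels` turns one row of logits into label names. A real training run would loop over many batches and epochs and reuse the same model and optimizer rather than rebuilding them on every call.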

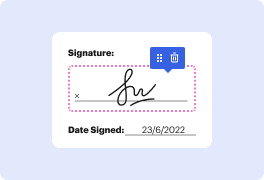

At DocHub, your data security is our priority. We follow HIPAA, SOC2, GDPR, and other standards, so you can work on your documents with confidence.
