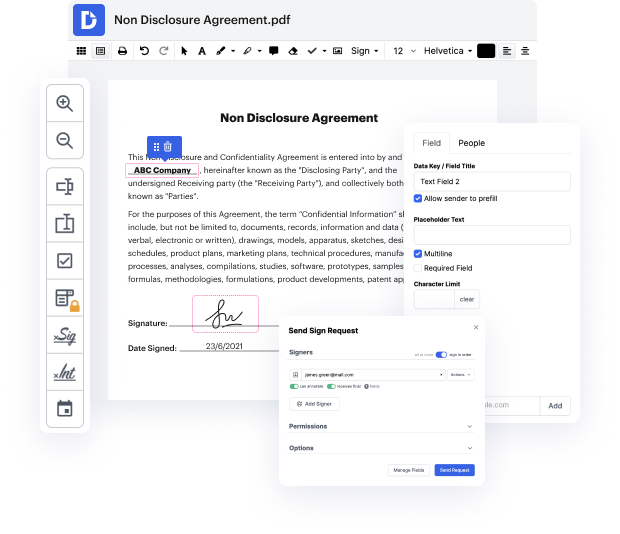

CSV files are not always the easiest format to work with. Many editing tools are available on the market, but not all of them offer a simple solution. We designed DocHub to make editing effortless, no matter the file format. With DocHub, you can quickly and easily erase formulas in CSV files. DocHub also provides an array of other features, including document generation, automation and management, industry-compliant eSignature solutions, and integrations.

DocHub also lets you save effort by creating templates from documents you use frequently. You can also take advantage of our many integrations, which connect our editor to your most-used applications. This makes it quick and easy to work with your documents without slowdowns.

DocHub is a handy tool for both individual and corporate use. It offers an extensive collection of features for document generation, editing, and eSignature, and it also has an array of capabilities for building multi-level, streamlined workflows. Anything imported into our editor is kept secure in accordance with major industry requirements that protect users' data.

Make DocHub your go-to option and streamline your document-driven workflows with ease!

Today we're going to take a look at a very common task when it comes to cleaning data. It's also a very common interview question if you're applying for a data or financial analyst type of job: how can you remove duplicates in your data? I'm going to show you three methods. It's important that you understand the advantages and disadvantages of the different methods, and why one of these methods might return a different result from the other ones. Let's take a look. Okay, so I have this table with sales agent, region, and sales value. I want to remove the duplicates that occur in this table, but first of all, what are the duplicates? Well, if we take a look at this row, for example, and this one, is this a duplicate? No, right? Because the sales value is different. But what about this one and this one? These are duplicates. What I want to happen is that every other occurrence of this line i
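The duplicate rule described above can be sketched in a few lines. A row is dropped only when it exactly repeats an earlier row. Rows that share an agent and region but differ in sales value are kept. The function name `remove_duplicates`, its `key_columns` parameter, and the sample rows are hypothetical and were made up for illustration; this is not one of the three methods from the video. The example also shows one plausible reason different methods can disagree. Comparing only some of the columns treats more rows as duplicates. That reason is an assumption here and not necessarily the explanation the video gives.

```python
from typing import Iterable, List, Optional, Sequence, Tuple

Row = Tuple[str, str, int]  # (sales agent, region, sales value)


def remove_duplicates(
    rows: Iterable[Row], key_columns: Optional[Sequence[int]] = None
) -> List[Row]:
    """Return rows in their original order, keeping only the first occurrence.

    key_columns (hypothetical parameter) lists the column indices that must
    match for two rows to count as duplicates; None means every column must match.
    """
    seen = set()
    result: List[Row] = []
    for row in rows:
        # Build the comparison key from all columns, or only the chosen ones.
        key = tuple(row) if key_columns is None else tuple(row[i] for i in key_columns)
        if key not in seen:
            seen.add(key)
            result.append(row)
    return result


# Hypothetical sample data, not the table from the video.
sales: List[Row] = [
    ("Alice", "North", 100),
    ("Alice", "North", 250),  # same agent and region, different sales value
    ("Bob", "South", 300),
    ("Bob", "South", 300),    # exact repeat of the previous row
]

if __name__ == "__main__":
    print(remove_duplicates(sales))
    # [('Alice', 'North', 100), ('Alice', 'North', 250), ('Bob', 'South', 300)]
    print(remove_duplicates(sales, key_columns=[0, 1]))
    # [('Alice', 'North', 100), ('Bob', 'South', 300)]
```

With every column compared, only the repeated Bob row is removed. If only agent and region are compared, the second Alice row is also dropped, even though its sales value differs.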

At DocHub, your data security is our priority. We follow HIPAA, SOC2, GDPR, and other standards, so you can work on your documents with confidence.
