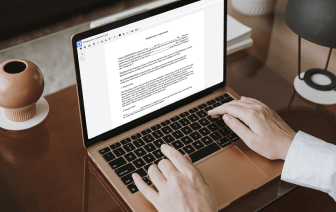

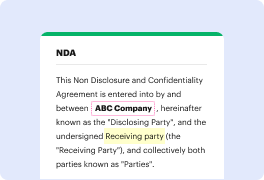

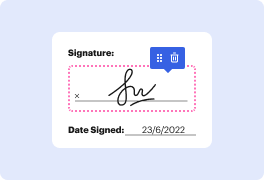

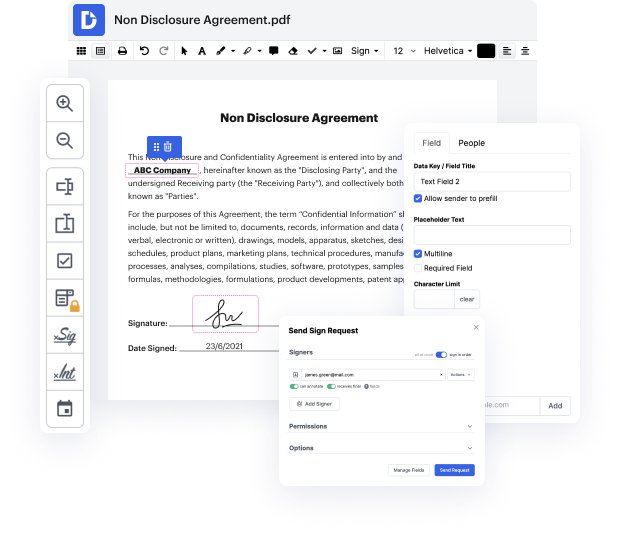

Many people find the process of embedding a fact in a WRD file rather difficult, especially if they don't regularly work with paperwork. These days, however, you no longer have to wade through long guides or spend hours waiting for editing software to install. DocHub lets you edit documents in your web browser without installing new applications. What's more, unlike many other online tools, our service provides a complete set of tools for comprehensive document management. That’s right. You no longer have to export and import your forms so frequently - you can do it all in one go!

Whatever type of paperwork you need to update, the process is easy. Make the most of our professional online service with DocHub!

Okay guys, let's start. I presume that you watched on video the chapter on recurrent networks, right? This is something that you are going to see also in the tutorial. I would like to show you one, I think, important topic that is related to word models, or to sequence models, and that is probably the hallmark for RNNs in general. So I would like to show you what is typically known in the literature as word embeddings, and let me present it in a very different way from how it is typically presented in the literature, because in the literature it always appears from the perspective of NLP, natural language processing. I would like to show it for a very different application, okay? And again, I'm not showing all the details and tricks of word embeddings, because usually you can just call a few functions that will do the job; I just want you to understand the learning problem that lies behind them. And this will be a segue to the topic of basically