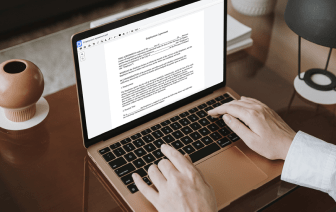

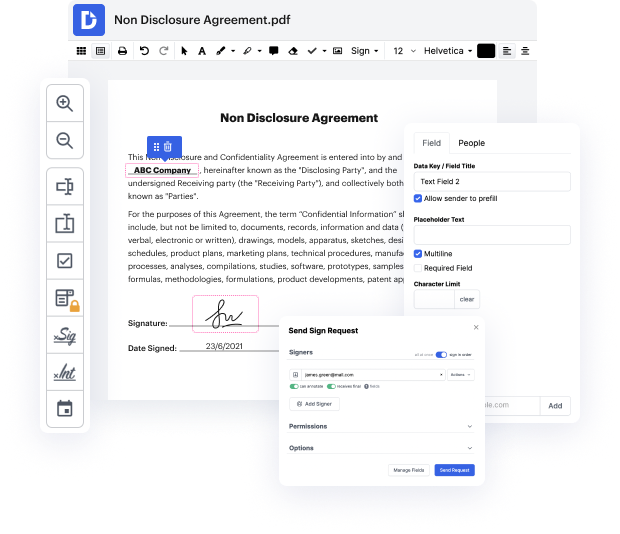

Document generation and approval are certainly key priorities for every organization. Whether you are dealing with large batches of documents or a single contract, you have to stay at the top of your productivity. Finding an ideal online platform that tackles your most common document creation and approval obstacles can take quite a lot of work. Many online platforms offer only a limited set of editing and eSignature features, some of which could be useful for handling DITA formatting. A platform that handles any format and task can be an exceptional choice when picking software.

Take document management and creation to a new level of simplicity and excellence without settling for a difficult user interface or costly subscription options. DocHub gives you the tools and features to work efficiently with all document types, including DITA, and to carry out tasks of any complexity. Modify, organize, and create reusable fillable forms effortlessly. Get complete freedom and flexibility to clean tokens in DITA anytime, and securely store all of your completed files in your profile or in one of several integrated cloud storage platforms.

DocHub offers loss-free editing, eSignature collection, and expert-level DITA management. You don't have to go through tiresome tutorials or spend countless hours figuring out the application. Make top-tier, secure document editing a standard part of your day-to-day workflows.

Welcome to the second section of our course. In this section, we'll see how to clean our text data. First, we'll focus on the tokenization problem: we'll see how to tokenize input data. Next, we'll clean stop words from the text input. Then we'll remove data-specific words that have a negative impact. At the end, we'll see how to handle white space. We'll see that many types of white space can occur in input text, and we'll see how to handle them.

This is the first video, in which we'll focus on tokenizing and on installing the proper libraries. First, we'll analyze the input data, and then we'll tokenize it, so the result will be an array of strings. We'll be using the Natural Language Toolkit (NLTK), a very good and well-proven library in the Python environment.

So how does tokenizing work? We have a simple text like "this is some text". This is the input to our tokenizer, and the output should be the tokens "this", "is", "some", "text". Having that, we will split our input into our
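The course itself uses NLTK. What follows is only a minimal, dependency-free sketch of the same cleaning steps (tokenizing, dropping stop words and data-specific words, normalizing white space) that uses Python's `re` module instead. The function names and the tiny `STOP_WORDS` set are hypothetical, chosen for illustration. NLTK's `word_tokenize` behaves differently: it keeps punctuation as separate tokens, follows Penn Treebank conventions, and needs tokenizer data downloaded first.

```python
import re

# Hypothetical stop-word set for illustration only; NLTK's English list
# (nltk.corpus.stopwords) is much larger and requires a data download.
STOP_WORDS = frozenset({"a", "an", "the", "is", "this", "some"})


def tokenize(text: str) -> list[str]:
    """Split text into word tokens, dropping punctuation (a simplification)."""
    return re.findall(r"\w+", text)


def remove_stop_words(tokens: list[str], stop_words: frozenset[str] = STOP_WORDS) -> list[str]:
    """Drop tokens whose lowercase form is in stop_words.

    Data-specific noise words can be removed the same way by passing an
    extended set, e.g. STOP_WORDS | {"data"}.
    """
    return [t for t in tokens if t.lower() not in stop_words]


def normalize_whitespace(text: str) -> str:
    """Collapse any run of white space (spaces, tabs, newlines, and Unicode
    spaces such as U+00A0) into a single space and trim both ends."""
    return re.sub(r"\s+", " ", text).strip()


if __name__ == "__main__":
    raw = "this  is\tsome\u00a0text\n"
    clean = normalize_whitespace(raw)
    tokens = tokenize(clean)
    print(tokens)                     # ['this', 'is', 'some', 'text']
    print(remove_stop_words(tokens))  # ['text']
```

With this sketch, `tokenize("this is some text")` returns `['this', 'is', 'some', 'text']`, matching the example in the transcript. Passing an extended set such as `STOP_WORDS | {"data"}` to `remove_stop_words` is one simple way to drop data-specific words along with ordinary stop words.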