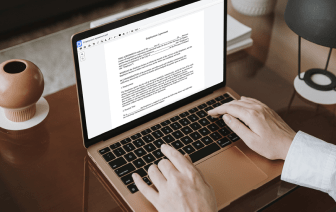

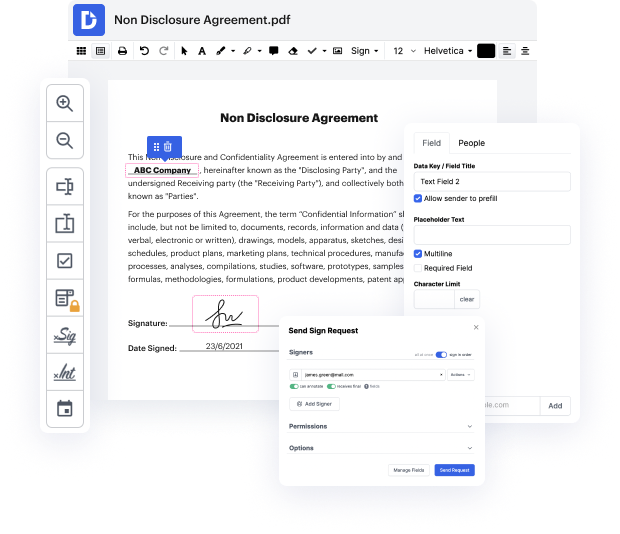

No matter how labor-intensive and challenging your files are to change, DocHub provides a straightforward way to modify them. You can alter any element in your CSV with little effort. Whether you need to tweak a single component or the whole form, you can rely on our powerful tool for quick, high-quality results.

Additionally, it makes sure that the output file is always ready to use, so you can get on with your tasks without delays. Our all-purpose toolset also includes sophisticated productivity features and a catalog of templates, letting you make the most of your workflows without losing time on recurring activities. On top of that, you can access your documents from any device and integrate DocHub with other solutions.

DocHub can take care of any of your form management activities. With an abundance of features, you can create and export documents however you choose. Everything you export to DocHub's editor is stored securely for as long as you need, with strict security and data protection protocols in place.

Check out DocHub now and make handling your files more seamless!

Up to this point, we've talked about vectorizing documents using bag-of-words approaches, whether it's binary, frequency, or TF-IDF. In this module, we'll go down one level and talk about how to vectorize words themselves and why that's useful. Specifically, we'll cover something called static embeddings and how they can help us capture some aspects of a word's meaning.

I underline the word "static" because the NLP world has moved on to more powerful contextualized embeddings, which we will cover later in this course. So we're not going to spend too much time on static embeddings, but it's important to understand a few core concepts here because they form the foundation for further material. They're also still very cool and can be useful for building base models.

Let's start by talking about the simplest way to vectorize words, which is one-hot encoding. One-hot encoding is a concept we've already encountered whe
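To make one-hot encoding of words concrete, here is a minimal pure-Python sketch. The toy corpus, the first-seen vocabulary ordering, and the helper names (`build_vocab`, `one_hot`, `bag_of_words`, `dot`) are hypothetical illustrations, not taken from the lecture. It shows three things: each word becomes a vector with a single 1 at its vocabulary index, summing a document's one-hot word vectors reproduces a frequency bag-of-words vector, and any two distinct one-hot vectors have a dot product of 0.

```python
# A minimal sketch of one-hot word vectors, assuming a small hypothetical
# toy corpus and helper names chosen for illustration only.

def build_vocab(tokens):
    """Map each distinct token to an integer index in first-seen order."""
    vocab = {}
    for tok in tokens:
        if tok not in vocab:
            vocab[tok] = len(vocab)
    return vocab


def one_hot(word, vocab):
    """Return a list of length |V| with a 1 at the word's index, 0 elsewhere."""
    vec = [0] * len(vocab)
    vec[vocab[word]] = 1
    return vec


def bag_of_words(tokens, vocab):
    """Sum the one-hot vectors of the tokens, giving a frequency (count) vector."""
    counts = [0] * len(vocab)
    for tok in tokens:
        counts = [c + x for c, x in zip(counts, one_hot(tok, vocab))]
    return counts


def dot(u, v):
    """Plain dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


corpus = "the cat sat on the mat the dog sat on the rug".split()
vocab = build_vocab(corpus)

cat = one_hot("cat", vocab)
dog = one_hot("dog", vocab)
mat = one_hot("mat", vocab)

print(vocab)  # {'the': 0, 'cat': 1, 'sat': 2, 'on': 3, 'mat': 4, 'dog': 5, 'rug': 6}
print(cat)    # [0, 1, 0, 0, 0, 0, 0]

# Summing one-hot vectors for a document yields its word counts.
print(bag_of_words("the cat sat on the mat".split(), vocab))  # [2, 1, 1, 1, 1, 0, 0]

# Distinct one-hot vectors are orthogonal: "cat" is exactly as
# (dis)similar to "dog" as it is to "mat".
print(dot(cat, dog), dot(cat, mat))  # 0 0
```

The orthogonality in the last line is the usual motivation for moving beyond one-hot vectors: they are as long as the vocabulary and encode no notion that some words are closer in meaning than others, which is what embeddings aim to capture.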