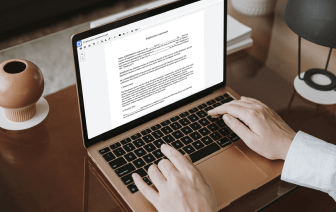

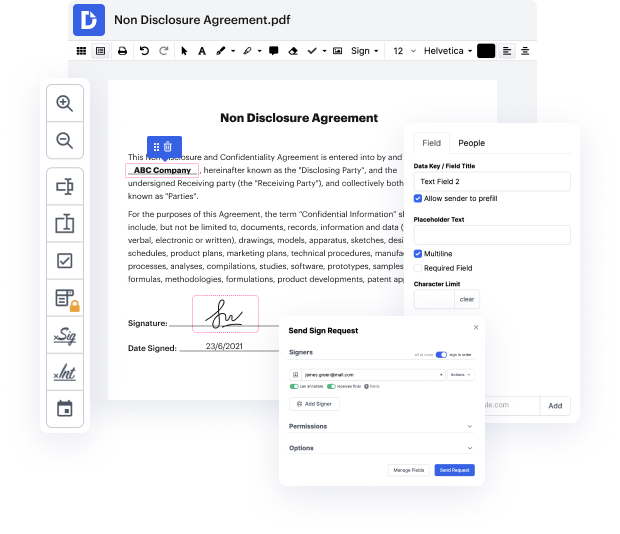

No matter how labor-intensive or hard to change your files are, DocHub gives you an easy way to modify them. You can alter any element in your NEIS without extra resources. Whether you need to modify a single component or the whole form, you can entrust the task to our powerful tool for fast, high-quality results.

Moreover, it ensures that the output file is always ready to use, so you can get on with your tasks without any slowdowns. Our comprehensive feature set also includes professional productivity tools and a collection of templates, so you can get the most out of your workflows without losing time on routine tasks. In addition, you can access your documents from any device and integrate DocHub with other solutions.

DocHub can take care of any of your form management tasks. With an abundance of capabilities, you can generate and export documents however you want. Everything you import into DocHub’s editor is stored securely for as long as you need, with strict security and data safety protocols in place.

Experiment with DocHub now and make managing your documents more seamless!

K-nearest neighbors (KNN) is a simple supervised machine learning algorithm that can be used for both classification and regression problems. Here is a simple two-dimensional example to give you a better understanding of the algorithm. Let's say we want to classify a given point into one of three groups. To find the k nearest neighbors of the given point, we first calculate the distance between it and every other point. There are many distance functions, but Euclidean distance is the most commonly used. Then we sort the other points by distance in increasing order and keep the first k as the nearest neighbors. For a classification problem, the point is classified by a vote of its neighbors: it is assigned to the class most common among its k nearest neighbors. The value of k controls the balance between overfitting and underfitting; the best value can be found with cross-validation and learning curves. A small k value usually leads to low bias but high va
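The steps described in the transcript (compute distances, sort, vote) can be sketched in a few lines of Python. This is a minimal illustration rather than a reference implementation: the function names `knn_classify` and `leave_one_out_accuracy`, the toy data, and the labels `"A"`, `"B"`, `"C"` are all made up for this example. Leave-one-out is used here as one simple form of cross-validation for comparing values of k.

```python
import math
from collections import Counter


def knn_classify(train_points, train_labels, query, k=3):
    """Classify `query` by majority vote among its k nearest training points."""
    # Step 1: Euclidean distance from the query to every training point.
    distances = [
        (math.dist(point, query), label)
        for point, label in zip(train_points, train_labels)
    ]
    # Step 2: sort by distance in increasing order and keep the k closest.
    distances.sort(key=lambda pair: pair[0])
    nearest_labels = [label for _, label in distances[:k]]
    # Step 3: return the most common class among the k neighbors.
    # On a tie, Counter keeps first-seen order, so the closer neighbor wins.
    return Counter(nearest_labels).most_common(1)[0][0]


def leave_one_out_accuracy(points, labels, k):
    """Hold out each point in turn, classify it from the rest, report accuracy."""
    correct = 0
    for i in range(len(points)):
        rest_points = points[:i] + points[i + 1:]
        rest_labels = labels[:i] + labels[i + 1:]
        if knn_classify(rest_points, rest_labels, points[i], k) == labels[i]:
            correct += 1
    return correct / len(points)


# Hypothetical toy data: three well-separated groups in two dimensions.
train_points = [
    (1.0, 1.0), (1.5, 2.0), (2.0, 1.0),   # group A
    (6.0, 6.0), (6.5, 7.0), (7.0, 6.0),   # group B
    (1.0, 7.0), (1.5, 6.0), (2.0, 7.0),   # group C
]
train_labels = ["A", "A", "A", "B", "B", "B", "C", "C", "C"]

prediction = knn_classify(train_points, train_labels, (1.8, 1.6), k=3)
print(prediction)  # A

# Compare a few candidate values of k by held-out accuracy.
for k in (1, 3, 5):
    print(k, leave_one_out_accuracy(train_points, train_labels, k))
```

On real data you would typically pick the k with the best held-out accuracy. A library such as scikit-learn provides tested implementations of both the classifier and cross-validation.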